#DO WE NEED TO INSTALL APACHE SPARK DOWNLOAD#

Note: you don’t need any prior knowledge of the Spark framework to follow this guide.

#DO WE NEED TO INSTALL APACHE SPARK INSTALL#

First, we need to install Java to execute Spark applications. Note that you don’t need to install the JDK if you just want to execute Spark applications and won’t develop new ones using Java. However, in this guide we will install the JDK. To do that, go to the JDK download page and download the latest version of the JDK.
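Once Java is installed, it can help to confirm that the `java` executable is actually reachable before moving on to Spark. The following is a minimal sketch, not part of the original guide: the helper names `find_java` and `java_version_text` are made up for illustration, and it only assumes that Java was added to your PATH.

```python
import shutil
import subprocess


def find_java():
    """Return the full path of the `java` executable on PATH, or None if absent."""
    return shutil.which("java")


def java_version_text(java_path):
    """Run `java -version` and return whatever it prints.

    The JVM writes its version banner to stderr, so both streams are captured.
    """
    result = subprocess.run(
        [java_path, "-version"],
        capture_output=True,
        text=True,
        check=False,
    )
    return (result.stderr or result.stdout).strip()


if __name__ == "__main__":
    java = find_java()
    if java is None:
        print("java was not found on PATH; install Java first")
    else:
        print(f"found java at {java}")
        print(java_version_text(java))
```

If the script reports that `java` was not found, the usual cause is that the Java `bin` folder has not been added to the PATH environment variable yet.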

#DO WE NEED TO INSTALL APACHE SPARK HOW TO#

    Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties
    21/09/16 13:54:59 INFO HistoryServer: Started daemon with process name:
    21/09/16 13:54:59 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
    21/09/16 13:55:00 INFO SecurityManager: Changing view acls to: sindhu
    21/09/16 13:55:00 INFO SecurityManager: Changing modify acls to: sindhu
    21/09/16 13:55:00 INFO SecurityManager: Changing view acls groups to:
    21/09/16 13:55:00 INFO SecurityManager: Changing modify acls groups to:
    21/09/16 13:55:00 INFO SecurityManager: SecurityManager: authentication disabled ui acls disabled users with view permissions: Set(sindhu) groups with view permissions: Set() users with modify permissions: Set(sindhu) groups with modify permissions: Set()
    21/09/16 13:55:00 INFO FsHistoryProvider: History server ui acls disabled users with admin permissions: groups with admin permissions
    21/09/16 13:55:00 INFO Utils: Successfully started service on port 18080.
    21/09/16 13:55:00 INFO HistoryServer: Bound HistoryServer to 0.0.0.0, and started at
    Exception in thread "main" java.io.FileNotFoundException: Log directory specified does not exist: file:/tmp/spark-events Did you configure the correct one through .logDirectory?
        at .(FsHistoryProvider.scala:280)
        at .(FsHistoryProvider.scala:228)
        at .(FsHistoryProvider.scala:410)
        at .history.HistoryServer$.main(HistoryServer.scala:303)
        at .(HistoryServer.scala)
    Caused by: java.io.FileNotFoundException: File file:/tmp/spark-events does not exist
        at .precatedGetFileStatus(RawLocalFileSystem.java:611)
        at .RawLocalFileSystem.getFileLinkStatusInternal(RawLocalFileSystem.java:824)
        at .RawLocalFileSystem.getFileStatus(RawLocalFileSystem.java:601)
        at .FilterFileSystem.getFileStatus(FilterFileSystem.java:428)
        at .(FsHistoryProvider.

This guide is for beginners who are trying to install Apache Spark on a Windows machine. I will assume that you have a 64-bit Windows version and that you already know how to add environment variables on Windows.

Please let me know what I am doing wrong. I have set the variables as mentioned below in nf, yet I am still getting the "file not found" exception for /tmp/spark-events.

If you have any issues setting up, please message me in the comments section and I will try to respond with the solution.
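The `FileNotFoundException` in the log above says that the log directory `file:/tmp/spark-events` does not exist when the history server starts. One way to clear that particular error is to create the directory before starting the server. Here is a minimal sketch, assuming the default location from the log is the one you want; the helper name `ensure_event_log_dir` is made up for illustration, and you should pass a different path if your configuration points elsewhere.

```python
import os
import tempfile


def ensure_event_log_dir(path="/tmp/spark-events"):
    """Create the event log directory if it is missing and return its absolute path.

    The default is the directory named in the exception; it is an assumption
    that this is the location your history server is configured to read.
    """
    os.makedirs(path, exist_ok=True)
    return os.path.abspath(path)


if __name__ == "__main__":
    created = ensure_event_log_dir()
    print(f"event log directory is ready: {created}")
```

Calling the function a second time is harmless, because `exist_ok=True` makes `os.makedirs` skip a directory that is already there.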

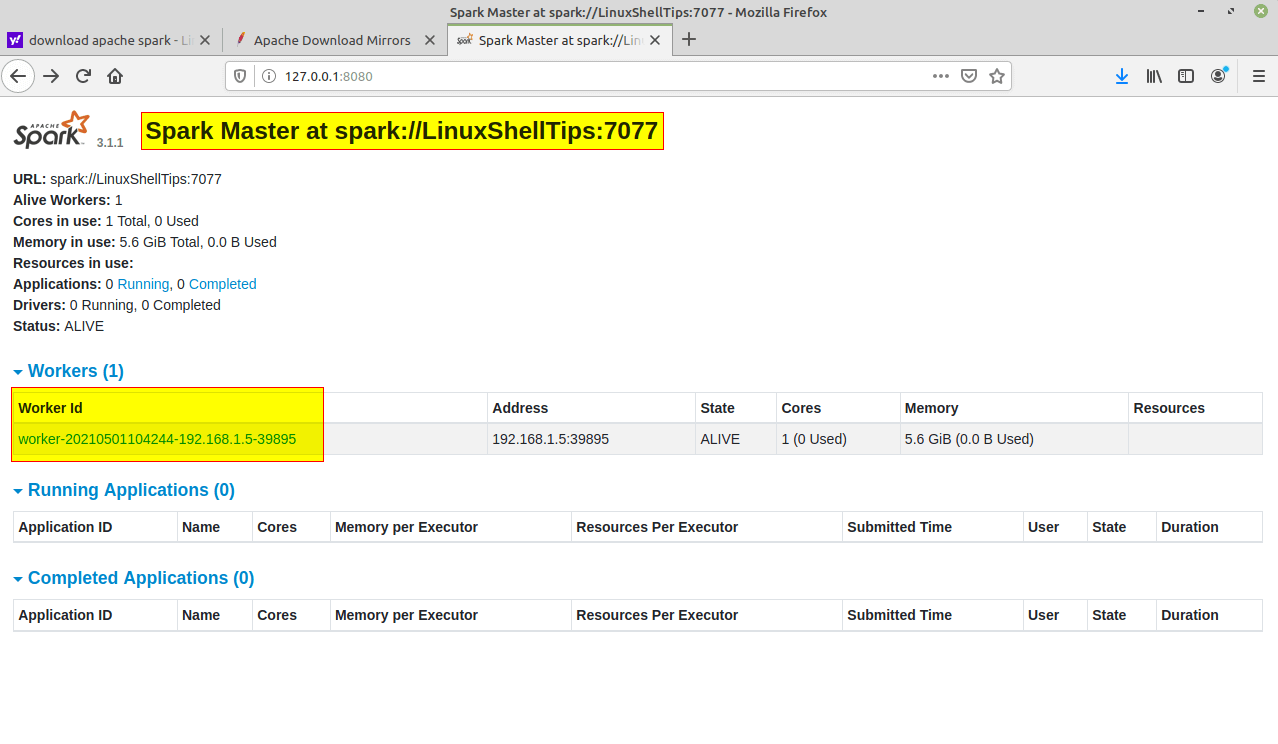

If you are running PySpark on Windows, you can start the history server by running the command below.

$SPARK_HOME/bin/spark-class.cmd .history.HistoryServer

By default, the history server listens on port 18080, and you can open the History Server page in a browser. By clicking on each App ID, you will get the details of the application in the PySpark web UI.

In summary, you have learned how to install PySpark on Windows and run sample statements in spark-shell.
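To check whether the history server is actually listening on port 18080, a quick TCP connection test is often enough. This is a minimal sketch using only the Python standard library; the function name `is_port_open` is made up for illustration, and `localhost` is an assumption that fits a single-machine install.

```python
import socket


def is_port_open(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


if __name__ == "__main__":
    # 18080 is the default history server port mentioned above.
    if is_port_open("localhost", 18080):
        print("something is listening on port 18080")
    else:
        print("nothing is listening on port 18080; is the history server running?")
```

A successful connection only shows that some process owns the port. Open the page in a browser to confirm it is really the history server.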

#DO WE NEED TO INSTALL APACHE SPARK MAC#

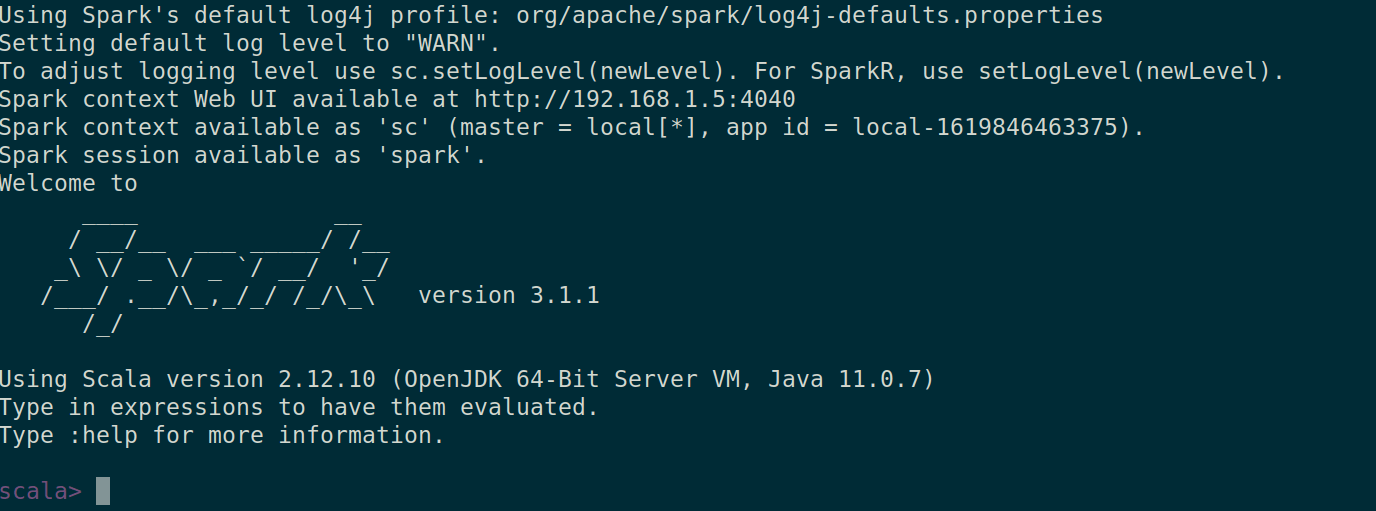

After downloading, untar the binary using 7zip and copy the underlying folder spark-3.0.0-bin-hadoop2.7 to c:\apps.

Now set the following environment variables.

PATH=%PATH%;C:\apps\spark-3.0.0-bin-hadoop2.7\bin

Download the winutils.exe file from winutils and copy it to the %SPARK_HOME%\bin folder. Winutils are different for each Hadoop version, so download the right version.

Now open the command prompt and type the pyspark command to run the PySpark shell. You should see something like this.

Apache Spark provides a suite of Web UIs (Jobs, Stages, Tasks, Storage, Environment, Executors, and SQL) to monitor the status of your Spark application. Spark-shell also creates a Spark context web UI, which you can open in a browser.

The history server keeps a log of all PySpark applications you submit through spark-submit or the pyspark shell. Before you start it, you first need to set the config below in nf. Now start the history server on Linux or Mac.
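The config values for the history server did not survive in the text above, so here is a hedged sketch of what such entries typically look like. The three property names are the ones Spark documents for event logging and the history server. The target file (Spark's `conf/spark-defaults.conf`), the directory URI, and the helper name `render_history_config` are assumptions made for illustration.

```python
def render_history_config(log_dir_uri):
    """Return spark-defaults.conf style lines that enable event logging.

    `log_dir_uri` is a hypothetical value such as "file:///tmp/spark-events";
    the directory it points to must already exist.
    """
    entries = [
        # Applications write their event logs only when this is enabled.
        ("spark.eventLog.enabled", "true"),
        # Where applications write their event logs.
        ("spark.eventLog.dir", log_dir_uri),
        # Where the history server looks for those logs.
        ("spark.history.fs.logDirectory", log_dir_uri),
    ]
    width = max(len(key) for key, _ in entries)
    return "\n".join(f"{key.ljust(width)}  {value}" for key, value in entries)


if __name__ == "__main__":
    print(render_history_config("file:///tmp/spark-events"))
```

Pointing both directory settings at the same location means the history server reads exactly what the applications write, which is the simplest setup on a single machine.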
